(From a chapter in *Computational Surgery and Dual Training*, Springer, in press, Fig. 1.)

We seek to better understand brain function and dysfunction. A principal difficulty in this endeavor is that the research spans everything from the behavior of molecules up to the behavior of people. Not only must this information be gathered; it must also be linked together to provide explanation and prediction. Computational neuroscience is developing a set of concepts and techniques to provide these links among findings and ideas that arise from disparate types of investigation.

In most ways, the brain is not much like a computer. Yet the computer is an enormous aid, both as a metaphor for aspects of brain function and as a tool for exploring it. New, more precise concepts of information and memory have grown out of tools applied to telecommunication and data processing. These tools and concepts are useful in our thinking about brain processes: thinking about thinking. Most importantly, computer models and simulations allow hypotheses to be tested explicitly, whether the high-level hypotheses of cognitive neuroscience or the low-level hypotheses of membrane and channel biophysics.

Our application projects are aimed at understanding brain diseases, particularly epilepsy, stroke and schizophrenia. We are trying to extend the notion of rational pharmacotherapeutics to permit development of new drug therapies or, in the future, genetic interventions, based on predictions of efficacy from simulation results. We hypothesize that epilepsy, schizophrenia and Parkinson disease (and perhaps, to a lesser extent, Alzheimer disease and autism) manifest via abnormalities in neurodynamics that can be modeled. Once these abnormalities are modeled, interventions can be designed to reverse them. Often these interventions will be non-obvious; that is, they will target a receptor or protein different from the one whose abnormality produced the pathology.

We are also interested in the ways that brain disorders can offer insights into brain function that are entirely missed when the brain is considered as a logic box, the traditional viewpoint of artificial intelligence and of much philosophical inquiry. Although computers now do remarkably well on tasks as diverse as chess, Jeopardy and facial recognition, it is clear that they do not do these things the way humans do. Clues to brain function will be found in the brain's remarkable modes of breakdown: the bizarre ideation of schizophrenia, and the striking perceptual and logical lacunae seen in hemineglect and Korsakoff syndrome.

It is often said that a highly developed neocortex distinguishes humans from other animals. Functional magnetic resonance imaging (fMRI) and positron emission tomography (PET) have made marvelous strides in localizing certain functions (specific language, visual, auditory and cognitive functions) to specific neocortical areas. However, this high-level analysis tells us nothing about how the neocortex actually processes information, or even how it generates its characteristic oscillations. This is what we are trying to understand better through moderately detailed simulations of neocortex that include the wiring among the six layers of cortex but use highly simplified neurons.
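The text does not say which simplified neuron model is used. As an illustration only, the sketch below assumes a leaky integrate-and-fire (LIF) unit, a common choice for large network models; the function name `simulate_lif` and all parameter values are hypothetical.

```python
def simulate_lif(i_input, dt=0.1, tau_m=20.0, v_rest=-65.0, v_thresh=-50.0,
                 v_reset=-65.0, r_m=10.0):
    """Euler-integrate a leaky integrate-and-fire neuron (hypothetical parameters).

    i_input: list of input currents in nA, one value per time step of dt ms.
    r_m is in megohms, so r_m * i is in mV.
    Returns the list of spike times in ms.
    """
    v = v_rest
    spikes = []
    for step, i in enumerate(i_input):
        # Membrane equation: tau_m * dv/dt = -(v - v_rest) + r_m * i
        v += dt / tau_m * (-(v - v_rest) + r_m * i)
        if v >= v_thresh:
            spikes.append(step * dt)  # record the spike time
            v = v_reset               # reset the membrane after a spike
    return spikes


# One second of constant input at two amplitudes.
weak = simulate_lif([1.0] * 10000)    # steady state -55 mV, below threshold
strong = simulate_lif([2.0] * 10000)  # steady state -45 mV, above threshold
```

With 1 nA the voltage settles below threshold and the unit stays silent; with 2 nA it fires repetitively. Reducing each cell to a single voltage variable like this is what makes multi-layer network simulations computationally cheap.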

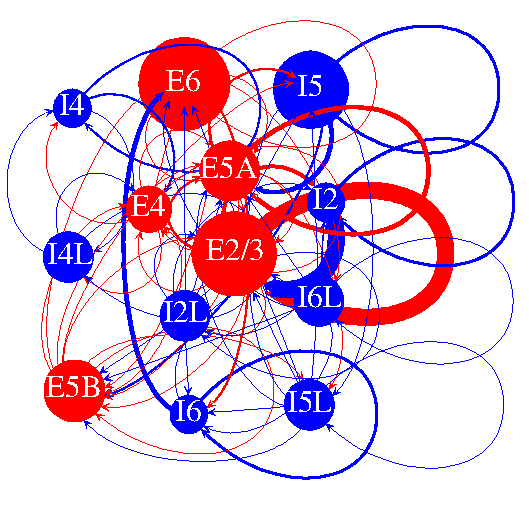

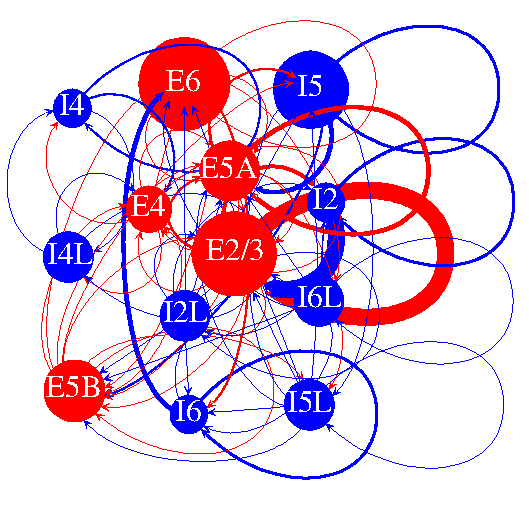

Graph-theoretic analysis suggests that Layer 2/3 of neocortex is a critical center, based both on its number of cells and on the strength of its connections to other areas. Here we show this central position using a layout algorithm that represents each layer's connective centrality as centrality of position. Excitatory (E) cells and connections are shown in red and inhibitory (I) ones in blue; the layer number is given next to the E or I. The size of each circle indicates the size of the population in that layer, and the width of each line indicates the strength of a projection.
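The text does not specify which graph-theoretic measure underlies the layout. One simple candidate, assumed here purely for illustration, is node strength: the summed weight of all projections into and out of a population. The sketch below computes it in plain Python; the function name and the edge list are hypothetical and are not taken from the model.

```python
from collections import defaultdict


def strength_centrality(edges):
    """Sum incoming and outgoing projection weights for each population.

    edges: iterable of (source, target, weight) tuples.
    Returns a dict mapping population -> total weight of its connections.
    """
    strength = defaultdict(float)
    for src, dst, w in edges:
        strength[src] += w  # outgoing projection
        strength[dst] += w  # incoming projection
    return dict(strength)


# Hypothetical weights for illustration only; not values from the model.
toy_edges = [
    ("E2/3", "E5", 3.0),
    ("E4", "E2/3", 2.0),
    ("E2/3", "I2/3", 1.5),
    ("I2/3", "E2/3", 1.5),
    ("E5", "E6", 1.0),
]

scores = strength_centrality(toy_edges)
most_central = max(scores, key=scores.get)
```

A force-directed layout would then tend to pull high-strength nodes toward the middle of the diagram. Weighting each node by its population size, the other factor mentioned above, would be a straightforward extension not shown here.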

That these networks can produce seizures is not surprising: in neuronal networks, flat-line (dead brain) and seizure are the easiest dynamics to produce. Producing activity similar to that seen in the living, thinking brain is harder. In particular, it is difficult to obtain the observed contrast between low firing rates in individual cells (often less than 1 spike per second, i.e. 1 Hz, for excitatory cells) and the much higher frequencies of population activity (ranging from 1 Hz up to more than 100 Hz). Somehow these very slow units are organized so that they work together to produce fast frequencies as an emergent property. By arranging the neurons as described above, we have been able to capture these dynamics, although it is still not entirely clear how they emerge. In the following figure, we compare the oscillatory relations from a real brain (left) with those from our simulation (right). Both show similar cross-frequency peaks, demonstrating prominent coupling between frequencies; note the similar locations of the red spots, which indicate strong comodulation.

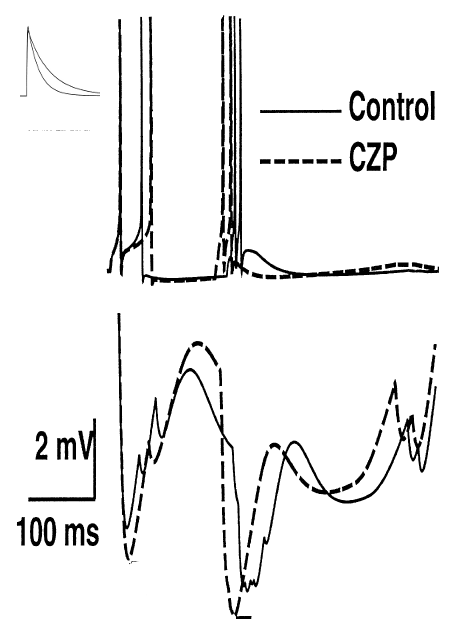
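The text notes that it is not yet clear how slow-firing cells produce fast population rhythms. The toy sketch below shows only the arithmetic that makes the two compatible, under an assumption that is not the model's mechanism: on every cycle of a 40 Hz population rhythm, each cell independently fires with a small probability `p_fire`. The function name and all parameter values are hypothetical.

```python
import random


def sparse_oscillation(n_cells=1000, freq_hz=40.0, p_fire=0.025,
                       duration_s=10.0, seed=1):
    """Toy model: on each cycle of a population rhythm, each cell fires with prob. p_fire.

    Returns (mean single-cell firing rate in Hz, list of spike counts per cycle).
    """
    rng = random.Random(seed)
    n_cycles = int(freq_hz * duration_s)
    spike_counts = [0] * n_cells
    per_cycle = []
    for _ in range(n_cycles):
        fired = 0
        for cell in range(n_cells):
            if rng.random() < p_fire:
                spike_counts[cell] += 1
                fired += 1
        per_cycle.append(fired)  # population participation in this cycle
    mean_rate = sum(spike_counts) / (n_cells * duration_s)
    return mean_rate, per_cycle


rate, per_cycle = sparse_oscillation()
```

Each cell then fires at about `freq_hz * p_fire` = 1 Hz, yet roughly 25 of the 1000 cells fire on every cycle, so the population as a whole follows the 40 Hz rhythm.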

Epilepsy has been a major focus of translational computational neuroscience because of the relative simplicity of its neurodynamics. The large-amplitude oscillations that characterize the electroencephalogram (EEG) during a seizure represent the coordinated activity of large numbers of neurons that, during normal activity, would be coordinated separately in multiple independent groups. We have looked at both single-cell alterations and network alterations that could produce seizures. Our overall aim is to connect specific abnormalities and specific treatments, which operate at the molecular scale of receptors and ion channels, with the network scale at which concerted activity is generated as seizures. Often we find that relatively small changes are amplified via cellular and network synergies to produce major changes in activity. In the following figure, the drug clonazepam increases the duration of the GABA<sub>A</sub> conductance (upper left inset), which alters activity in a 50-cell network: changes in inhibitory postsynaptic potentials (IPSPs; below) are associated with changes in spike firing times and patterns (above).
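The first, single-synapse step of the clonazepam example can be sketched with simple arithmetic. Assuming, for illustration only, that a GABA<sub>A</sub> conductance decays as a single exponential `g(t) = g_max * exp(-t / tau)`, its time integral is `g_max * tau`, so prolonging the decay time constant increases the inhibitory charge proportionally. The function `integrated_conductance` and the 5 ms and 10 ms time constants below are hypothetical.

```python
import math


def integrated_conductance(g_max_ns, tau_decay_ms, dt_ms=0.01, t_end_ms=200.0):
    """Numerically integrate g(t) = g_max * exp(-t / tau_decay) with a Riemann sum.

    Returns conductance-time in nS*ms, which is proportional to the
    charge carried by the synapse at a fixed driving force.
    """
    n_steps = round(t_end_ms / dt_ms)
    return sum(g_max_ns * math.exp(-k * dt_ms / tau_decay_ms) * dt_ms
               for k in range(n_steps))


baseline = integrated_conductance(1.0, 5.0)    # hypothetical control decay
prolonged = integrated_conductance(1.0, 10.0)  # hypothetical prolonged decay
ratio = prolonged / baseline
```

The ratio comes out close to 2. The network-level amplification described above, where IPSP changes reshape spike timing across 50 cells, is beyond this sketch.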

Following brain damage, whether from trauma or stroke, rehabilitation is limited by the absence of the lost brain regions. By picking up signals from the remaining regions, we can use brain simulation to replace the missing areas. Our present focus is on sensorimotor integration, where close coordination is required to permit haptic feedback to modify motor commands for tasks such as grasping and manipulating objects.

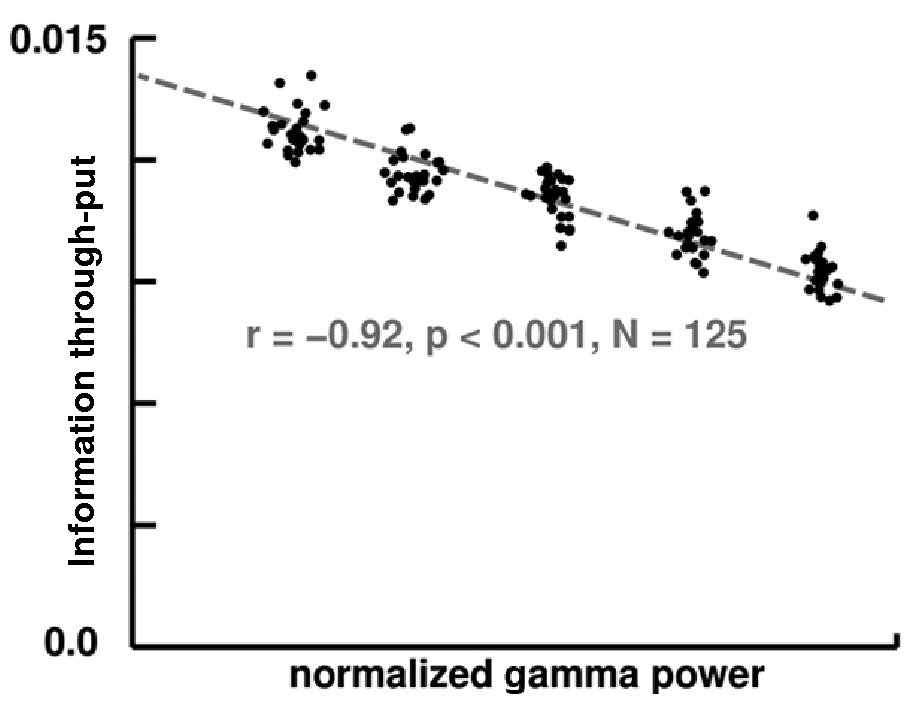

Above we suggested that human neocortex is special; human archicortex, the hippocampus together with its allied areas (subiculum, entorhinal cortex), is special too. Our memory for the episodes of our lives is remarkable and likely unrivaled, even by elephants, which proverbially never forget (but alas can never tell us what they have remembered). The hippocampus is a focus of our modeling, with particular emphasis on alterations in dynamics that might lead to schizophrenia. We are looking at alterations in information flow that depend on the dynamical changes identified in schizophrenic patients and in animal models of schizophrenia. One hypothesis is that activity in the psychotic brain might, paradoxically, be too organized. In the following figure we demonstrate in silico that augmented gamma (a sign of excessive neural coordination) could be associated with reduced information flow-through. We ran 125 separate simulations to show that similar results are obtained despite randomized differences in wiring and driving inputs.
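The text does not say how information flow-through is quantified. One common option, assumed here for illustration, is the mutual information between a discretized input sequence and a discretized output sequence. The sketch below is a plug-in estimator; the function name is hypothetical, and plug-in estimates are biased upward for short sequences, so a real analysis would need bias correction or shuffle controls.

```python
import math
from collections import Counter


def mutual_information_bits(xs, ys):
    """Plug-in estimate of the mutual information (in bits) between two discrete sequences."""
    if len(xs) != len(ys):
        raise ValueError("sequences must have equal length")
    n = len(xs)
    joint = Counter(zip(xs, ys))  # counts of (input, output) symbol pairs
    px = Counter(xs)              # marginal counts of input symbols
    py = Counter(ys)              # marginal counts of output symbols
    mi = 0.0
    for (x, y), c in joint.items():
        p_xy = c / n
        mi += p_xy * math.log2(p_xy / ((px[x] / n) * (py[y] / n)))
    return mi


# Output copies the input perfectly: 1 bit per symbol gets through.
faithful = mutual_information_bits([0, 1] * 50, [0, 1] * 50)
# Output is unrelated to the input: no information gets through.
unrelated = mutual_information_bits([0, 0, 1, 1] * 25, [0, 1, 0, 1] * 25)
```

Running such an estimate once per simulation, across an ensemble with randomized wiring and inputs, is one way to check that a drop in information flow is robust rather than an artifact of a single network instance.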

We have developed packages for high-capacity data mining of both physiological and simulated signals. Data files from both experiments and models can run to tens of gigabytes or more, making it infeasible to view all of the data directly. Instead, we develop algorithms that scan through thousands or millions of recordings to find those of interest, or that generate scatter plots or other graphical devices for viewing everything at once through some simplifying "lens."
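The scanning step described above can be sketched as a streaming filter. The feature (root-mean-square amplitude), the threshold, the toy recordings, and the function names below are all hypothetical; the source does not describe the packages' actual interface.

```python
import math
from typing import Iterable, Iterator, Sequence, Tuple


def rms(trace: Sequence[float]) -> float:
    """Root-mean-square amplitude of one recording."""
    return math.sqrt(sum(v * v for v in trace) / len(trace))


def scan_recordings(
    recordings: Iterable[Tuple[str, Sequence[float]]], threshold: float
) -> Iterator[Tuple[str, float]]:
    """Yield (name, rms) for each recording whose RMS exceeds the threshold.

    Recordings are consumed one at a time, so the full dataset never
    has to be held in memory.
    """
    for name, trace in recordings:
        score = rms(trace)
        if score > threshold:
            yield name, score


# Hypothetical toy recordings.
recordings = [("quiet", [0.1, -0.1, 0.1, -0.1]), ("burst", [2.0, -2.0, 2.0, -2.0])]
hits = list(scan_recordings(recordings, threshold=1.0))
```

Because `scan_recordings` is a generator, it can be fed recordings read lazily from disk. Collecting two features per recording instead of filtering would give the coordinates for a scatter plot of the whole dataset.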

Data mining is also called KDD, for knowledge discovery and data mining. The phrase "knowledge discovery" helps explain how these procedures differ from traditional data reduction using statistical methods. In statistics, one has a prior hypothesis, sometimes well founded and sometimes not, about the structure of the data. Often, for example, data are assumed to follow a normal distribution and can therefore be described by the Gaussian parameters of mean and standard deviation. By contrast, KDD proceeds via exploration with no a priori assumptions. Instead, the investigator generates hypotheses quickly, on the fly, which can then be supported or discarded through further exploration. KDD is sometimes denigrated as "fishing": pulling up random facts rather than tracking and hunting them down. Perhaps it is somewhere in between, fishing with one of those newfangled sonars that allow one to quickly identify the location and approximate composition of a school of fish.
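A toy example shows how an assumed distribution can hide structure that exploration would reveal. The data below are hypothetical: two tight clusters at -1 and +1, summarized with Python's standard `statistics` module.

```python
import statistics

# Hypothetical bimodal data: two clusters, with no values in the middle.
data = [-1.0] * 50 + [1.0] * 50

mean = statistics.mean(data)   # Gaussian location parameter
sd = statistics.pstdev(data)   # Gaussian scale parameter (population SD)

# Count the values lying within half a standard deviation of the mean.
near_mean = sum(1 for v in data if abs(v - mean) < 0.5 * sd)
```

A normal distribution with this mean and standard deviation would place about 38% of values within half a standard deviation of the mean; here there are none, which a quick histogram or scatter plot would show immediately.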

The brain is an example of a non-stationary system, one whose statistical properties change over time, so that the signals occurring now will never be repeated. If one sees the same image 100 times, one doesn't see it in quite the same way each time. If nothing else, one's response to the image is conditioned by the fact that it has been seen before: either it gets boring, so that one's mind (and brain) wanders, or one finds new facets of the image to focus on. Although some aspects of brain state will clearly recur with repeated viewing, the most interesting and revealing aspects will be those that change. The brain isn't a passive system; it is always actively correlating and recombining thoughts, memories and sense perceptions.

For these reasons and others, we are generally skeptical of the common practice of averaging brain signals. Like the statistical methods discussed above, averaging embodies a set of statistical assumptions, one of which is stationarity.
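One concrete way in which non-stationarity undermines averaging is trial-to-trial variation in response latency. In the toy sketch below, every trial contains the same response, but it starts later on each trial; the trials and the helper names `pulse` and `average` are hypothetical.

```python
def pulse(n_samples, onset, width, height=1.0):
    """A rectangular response of the given width starting at sample `onset`."""
    return [height if onset <= i < onset + width else 0.0 for i in range(n_samples)]


def average(trials):
    """Sample-by-sample mean across trials (the usual trial average)."""
    n = len(trials)
    return [sum(values) / n for values in zip(*trials)]


# Hypothetical trials: identical responses whose latency drifts from trial to trial.
trials = [pulse(100, onset, width=5) for onset in (20, 30, 40, 50)]
avg = average(trials)
```

Every trial has a peak of 1.0, but the average peaks at only 0.25 and is spread over four times the duration, a waveform that matches none of the individual trials.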